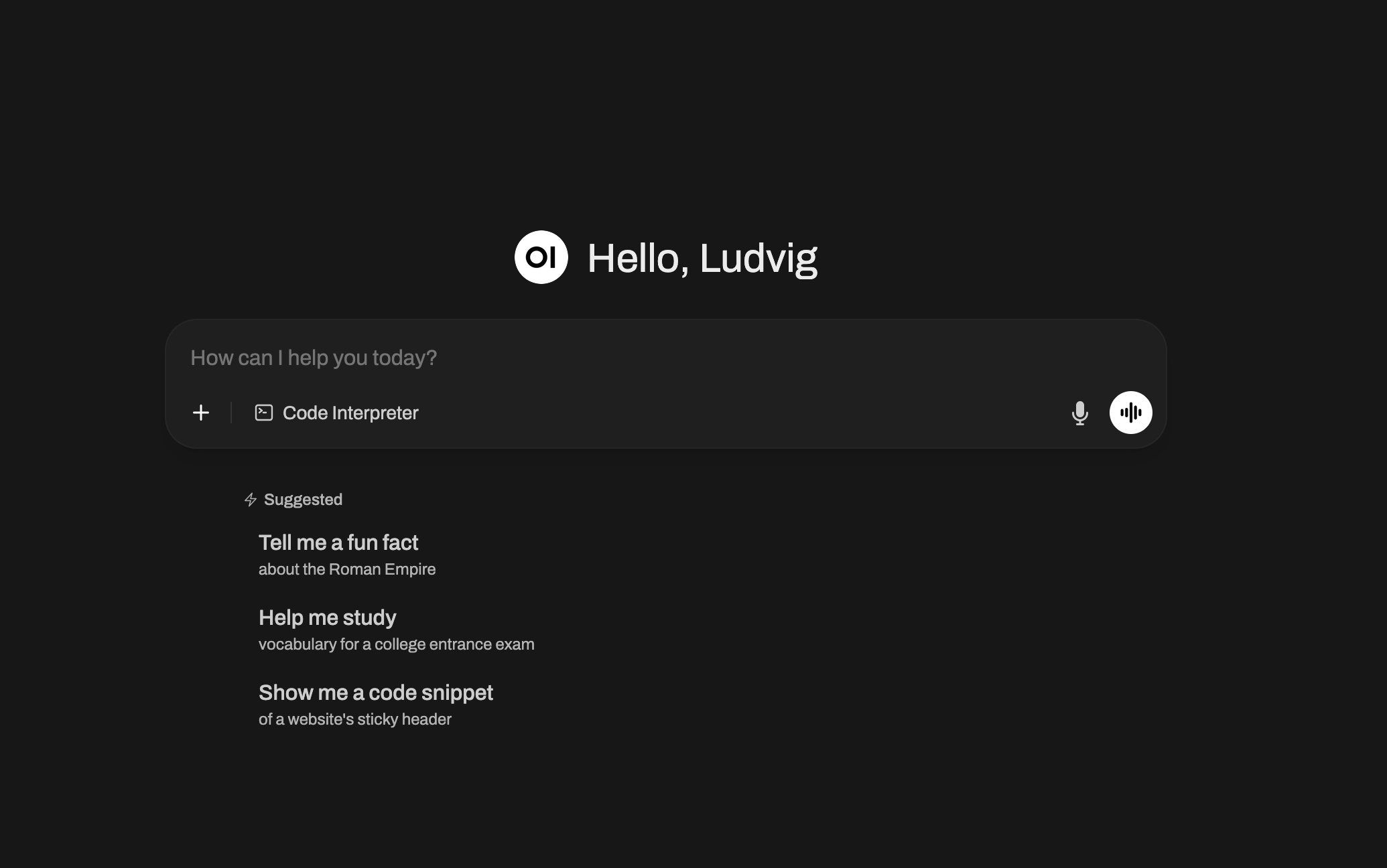

Deploy Your Own ChatUI

With Open WebUI, you can run a ChatGPT-style interface that stays fully under your control. Below are two example setups: a quick individual deployment and a more advanced, organization-oriented deployment. These are not the only ways to run a ChatUI, just example prompts; in practice, you can design your system architecture however you like.

Basic Setup: Open WebUI with Provider Key

The most direct way to run Open WebUI is to supply it with a provider API key. This creates a private chat interface that connects straight to your chosen LLM provider.

This setup is best suited for individuals who want a private, personal ChatGPT-style instance without additional infrastructure.
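As a rough illustration of the basic setup, the sketch below builds a `docker run` command for a single Open WebUI container and passes the provider key as an environment variable. It assumes Docker, an OpenAI-compatible provider, and the image name, container port, and `OPENAI_API_KEY` variable described in the Open WebUI documentation at the time of writing. The function name, host port, and key value are placeholders, not part of any official tooling.

```python
import shlex


def open_webui_docker_command(api_key: str, host_port: int = 3000) -> list[str]:
    """Build a `docker run` argument list for a single-user Open WebUI container."""
    return [
        "docker", "run", "-d",
        "--name", "open-webui",
        # Open WebUI listens on port 8080 inside the container.
        "-p", f"{host_port}:8080",
        # Named volume so chats and settings survive container restarts.
        "-v", "open-webui:/app/backend/data",
        # The provider key is read by the server; it is not typed into the browser.
        "-e", f"OPENAI_API_KEY={api_key}",
        "ghcr.io/open-webui/open-webui:main",
    ]


if __name__ == "__main__":
    # Placeholder key: substitute your own before running the printed command.
    cmd = open_webui_docker_command("sk-your-provider-key")
    print(shlex.join(cmd))
```

The script only prints the command, so you can review it before running it yourself. In a real deployment you would likely read the key from a secret store or an environment file rather than a literal string.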

Advanced Setup: Open WebUI with LiteLLM Proxy

For teams and organizations, managing API keys directly is neither practical nor secure. Placing a LiteLLM proxy between Open WebUI and your providers enables:

- Centralized authentication – provider keys are never exposed to end users.

- Model flexibility – route traffic to OpenAI, Anthropic, Azure, or local models through one proxy.

- Monitoring and control – track usage, apply quotas, and manage cost centrally.

- Future-proofing – swap or add providers without changing the frontend.
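To make the routing and future-proofing points concrete, here is a minimal sketch of how a proxy can map stable, frontend-facing model names to provider backends. The structure mirrors the `model_list` section of a LiteLLM `config.yaml`, written here as a Python list for illustration. The alias names (`chat-default`, `chat-long-context`, `chat-local`), the chosen backend models, and the `backend_for` helper are assumptions made for this example.

```python
# Each entry mirrors one item of LiteLLM's `model_list`:
# `model_name` is what the frontend sees, `litellm_params.model` is the backend.
MODEL_LIST = [
    {
        "model_name": "chat-default",
        "litellm_params": {
            "model": "openai/gpt-4o",
            # LiteLLM's config syntax for "read this value from the environment".
            "api_key": "os.environ/OPENAI_API_KEY",
        },
    },
    {
        "model_name": "chat-long-context",
        "litellm_params": {
            "model": "anthropic/claude-3-5-sonnet-20240620",
            "api_key": "os.environ/ANTHROPIC_API_KEY",
        },
    },
    {
        "model_name": "chat-local",
        "litellm_params": {
            "model": "ollama/llama3",
            "api_base": "http://localhost:11434",
        },
    },
]


def backend_for(alias: str) -> str:
    """Return the provider-qualified model that an alias currently points to."""
    for entry in MODEL_LIST:
        if entry["model_name"] == alias:
            return entry["litellm_params"]["model"]
    raise KeyError(f"unknown model alias: {alias}")
```

Because Open WebUI only ever sees the alias, pointing `chat-default` at a different provider is a change to this list on the proxy side, not to the frontend.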

Our system automatically generates the LiteLLM API key and configures Open WebUI with it.

This configuration gives your team a managed, private ChatGPT-style interface that can connect to any language model backend the proxy supports, with security and governance built in.
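If you were wiring this up by hand, the flow could look roughly like the sketch below: request a scoped key from the LiteLLM proxy, then point Open WebUI at the proxy instead of at a provider. This is not how the automated system is implemented. It assumes a LiteLLM proxy reachable at `http://localhost:4000` with a master key configured, uses LiteLLM's `/key/generate` endpoint, and treats the helper names, the `chat-default` alias, and the budget value as hypothetical.

```python
import json
import urllib.request


def build_key_request(proxy_url: str, master_key: str,
                      models: list[str], max_budget: float) -> urllib.request.Request:
    """Prepare (but do not send) a request for a scoped LiteLLM virtual key."""
    body = {"models": models, "max_budget": max_budget}
    return urllib.request.Request(
        url=f"{proxy_url.rstrip('/')}/key/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # The master key authorizes key creation; end users never see it.
            "Authorization": f"Bearer {master_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def open_webui_env(proxy_url: str, virtual_key: str) -> dict[str, str]:
    """Environment variables that point Open WebUI at the proxy."""
    return {
        "OPENAI_API_BASE_URL": f"{proxy_url.rstrip('/')}/v1",
        "OPENAI_API_KEY": virtual_key,
    }


if __name__ == "__main__":
    req = build_key_request("http://localhost:4000", "sk-master-placeholder",
                            ["chat-default"], 25.0)
    print(req.get_method(), req.full_url)
    # With a running proxy, the key could be fetched like this:
    # with urllib.request.urlopen(req) as resp:
    #     virtual_key = json.load(resp)["key"]
```

Open WebUI then holds only the virtual key, so revoking or re-scoping access happens on the proxy without touching any provider credentials.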

Summary

- Basic setup – fast and simple, ideal for personal use.

- Advanced setup – recommended for organizations, with centralized control, model flexibility, and secure key management.

- Beyond these examples – there are as many ways to architect your ChatUI system as there are ways to write a prompt. These two setups are simply reference points.